Like everyone else, I’ve been having a bit of a play with ChatGPT. There have been some nice examples recently of people asking it questions and getting answers that are grammatically correct, well-presented, completely plausible, and completely wrong.

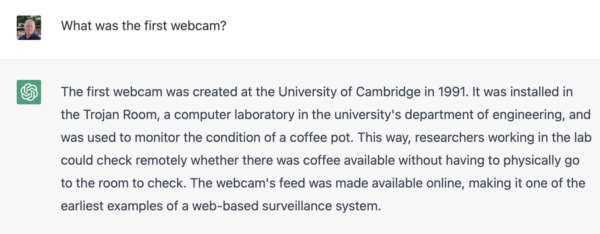

That didn’t happen to me. There was one question I felt I ought to ask it, and it gave a mostly correct response, although its description of the Trojan Room wasn’t quite right. Overall, not bad. But it was the last sentence that took me by surprise:

Well, yes, I suppose it was a surveillance system, though no human has used that phrase to me before when describing it!

Perhaps it’s only natural, though, that a machine should think of things chiefly from the point of view of the coffee pot?