One of the very valuable things to come out of large data centres is large-scale reliability statistics. I’ve written before about my suspicions that my Seagate drives weren’t as reliable as they might be, but I had insufficient data for this to be anything other than anecdotal.

And then a couple of weeks ago, I pulled a couple of old 2.5″ drives off a shelf (Western Digital ones, I think), intending to reuse them for backups. They both spun up, but neither would work beyond that.

So I was very interested by this Backblaze blog post which discusses their experience with a few thousand more drives than I have at my disposal. They use consumer-grade drives, and are very price-sensitive.

A quick summary:

Some quotes:

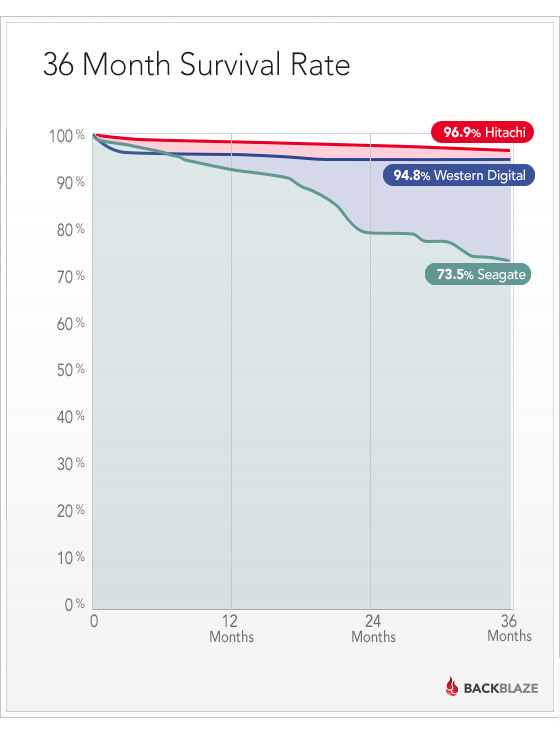

Hitachi does really well. There is an initial die-off of Western Digital drives, and then they are nice and stable. The Seagate drives start strong, but die off at a consistently higher rate, with a burst of deaths near the 20-month mark.

Having said that, you’ll notice that even after 3 years, by far most of the drives are still operating.

Yes, but notice, too, that if you have four computers with Seagate drives, you should not expect the data on one of them to be there in three years’ time. And, quite possibly, not there by the Christmas after next.
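To put rough numbers on that "one in four" reading, here is a minimal sketch. It assumes, purely for illustration, that each drive has a 25% chance of failing within three years (the figure implied by the sentence above, not a value read off the Backblaze chart) and that drives fail independently of one another. The function names are my own.

```python
def expected_failures(n_drives: int, p_fail: float) -> float:
    """Average number of failed drives out of n_drives."""
    return n_drives * p_fail


def prob_at_least_one_failure(n_drives: int, p_fail: float) -> float:
    """Chance that one or more of n_drives fails, assuming independence."""
    return 1.0 - (1.0 - p_fail) ** n_drives


# Assumed three-year failure probability per drive (illustrative only).
ASSUMED_P_FAIL = 0.25

print(expected_failures(4, ASSUMED_P_FAIL))                    # 1.0
print(round(prob_at_least_one_failure(4, ASSUMED_P_FAIL), 3))  # 0.684
```

So with four such drives you would expect one failure on average, and under these assumptions there is roughly a two-in-three chance of losing at least one of them.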

The drives that just don’t work in our environment are Western Digital Green 3TB drives and Seagate LP (low power) 2TB drives. Both of these drives start accumulating errors as soon as they are put into production. We think this is related to vibration.

and

The good pricing on Seagate drives along with the consistent, but not great, performance is why we have a lot of them.

If the price were right, we would be buying nothing but Hitachi drives. They have been rock solid, and have had a remarkably low failure rate.

and

We are focusing on 4TB drives for new pods. For these, our current favorite is the Seagate Desktop HDD.15 (ST4000DM000). We’ll have to keep an eye on them, though.

Excellent stuff, and worth reading in more detail, especially if longevity is important to you. It’s tempting to fill old drives with data and put them on the shelf as archival backups, but this would suggest that you should only use new drives for that!

Oh, and if you’re wondering about which SSDs to buy, this report suggests that Intel ones are pretty good.

Update: Thanks to Dominic Plunkett for the Backblaze link, and to Rip Sohan for a link in the comments to the TweakTown article that attempts (with some, but not a great deal of, success) to debunk some of this. The previous article I mentioned above links to an older Google study which didn’t distinguish between manufacturers and models, but did say that there was a correlation between them and the failure rates. It also catalogued failure rates not too dissimilar to the Backblaze ones after 3 or so years, so the general implication for home archiving remains!

I’ve been thinking a lot about backups recently – and making some electronic scribblings on the subject – but you write more quickly than I do, Quentin, and more concisely! This Backblaze blog post is a good read – it’s nice to see such a large sample size. Thanks for sharing. Here’s a recent article that led me to re-evaluate a few assumptions on the subject of long-term backups: http://arstechnica.com/information-technology/2014/01/bitrot-and-atomic-cows-inside-next-gen-filesystems/

Yes, that’s good!

I’ve long wanted to set up a ZFS-based server with a reasonable number of disks attached; the FreeNAS distribution seems to be the gold standard at present. Before too long, something btrfs-based may be even better.

But the thing that’s stopped me has been that, for ZFS to work well, you need a reasonably powerful machine with plenty of RAM. It’s not something to throw together on an elderly Pentium with a gig of RAM. And when you’ve bought such a machine, you’ll have paid the same as, say, a Drobo 5N. The FreeNAS machine will probably be faster, and less proprietary, and more flexible, and would almost certainly have better protection against bit rot. But it will also be bigger, noisier, use more power, and take a great deal more time to set up and manage.

Still, it would be fun…

I managed to hit “save” on my (probably too lengthy) writings about backups. Link below. Personally, I don’t trust myself to run my own last-line-of-defence backup. For things which are personal, irreplaceable but not overly sensitive, I prefer to pay someone else to worry about it. I might be kidding myself but I try to make sure that where possible, I don’t rely 100% on any one system.

http://www.rmorrison.net/mnemozzyne/disembarqing

How long would it take you to upload everything (excluding raw files and so on)? I’m glad I managed it – since once should be enough.

You might want to read this — it appears Backblaze’s methodology was faulty – http://www.tweaktown.com/articles/6028/dispelling-backblaze-s-hdd-reliability-myth-the-real-story-covered/index.html

Hi Rip –

Yes, that’s a good article too, but it doesn’t quite convince me either.

For example, the author points out that Backblaze’s storage chassis has evolved and improved over the last few years to reduce vibration, and asserts that more of the drives in the old models were Seagates. But Backblaze are showing at least three years of data for all of the manufacturers, and the newest chassis is less than a year old, which must mean that a large number of the other manufacturers’ drives were in the old chassis too. The figures might be skewed if they had purchased more Seagates in the past and more Hitachis now for the new chassis, but they do assert that they continue to buy primarily Seagate drives.

Seagate are saying that this is heavy enterprise-class use and they shouldn’t be using consumer drives, but that is true of the other manufacturers too. While this may not indicate that Seagate’s enterprise drives would be worse than Hitachi’s enterprise drives, it does tend to indicate that consumer drives are not all equal, and that would, to some degree, justify the link-bait headline 🙂

The Google study mentioned in my earlier post (linked to above) involved 100,000 consumer drives:

http://static.googleusercontent.com/external_content/untrusted_dlcp/research.google.com/en//archive/disk_failures.pdf

They say that:

“Failure rates are known to be highly correlated with drive models, manufacturers and vintages [18]. Our results do not contradict this fact.”

but they decided not to publish the manufacturer- and model-based numbers.

For me, though, the key number is that they observed failure rates roughly in the 6-8% range for each of the 2-year-old, 3-year-old and 4-year-old populations, which means that their numbers are at least in the same ballpark as Backblaze’s, and the implications for home archiving, if not for brand choice, are clear!
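As a rough illustration of what rates like that add up to, the per-year figures can be compounded. This is only a sketch under stated assumptions: it treats the 6-8% figures as annualised rates and uses a flat 7% for each of years two to four, it plugs in a placeholder of 2% for the first year (that number is not taken from the study), and it treats each year as independent.

```python
def cumulative_failure(annual_rates):
    """Probability a drive has failed by the end of the period,
    given a failure rate for each successive year."""
    surviving = 1.0
    for rate in annual_rates:
        surviving *= 1.0 - rate
    return 1.0 - surviving


# Year 1 is a placeholder assumption; years 2-4 use the midpoint of 6-8%.
assumed_rates = [0.02, 0.07, 0.07, 0.07]

print(f"{cumulative_failure(assumed_rates):.1%}")  # 21.2%
```

On those assumptions, roughly one drive in five would be gone by the end of year four.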

All the best,

Q